Heather Penney explores national security artificial intelligence applications and considerations with Sean Moriarty and Leonard Law of Primer, a leading AI company. We explore how AI can help improve U.S. defense capabilities, discuss how vulnerabilities and challenges can be managed, and address the market conditions required to transition this technology to reality. How do we assure quality data and security of the AI tool and its outputs? How do we avoid ingesting bad data without ignoring emerging information in the battlespace? And perhaps most importantly, how do we generate trust between warfighters and AI? All of this must be generated at the pace of battlespace relevance.

Guests

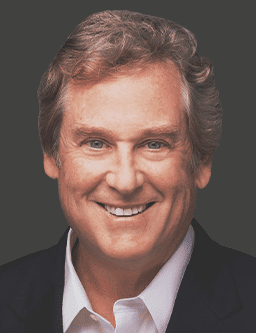

Sean Moriarty, Chief Executive Officer, Primer Technologies

Leonard Law, Chief Product Officer, Primer Technologies

Host

Heather Penney, Director of Research, The Mitchell Institute for Aerospace Studies

Transcript

Heather “Lucky” Penney: [00:00:00] Welcome to the Aerospace Advantage Podcast, brought to you by PenFed. I’m your host, Heather “Lucky” Penney. Here on the Aerospace Advantage, we speak with leaders in the DOD, industry, and other subject matter experts to explore the intersection of strategy, operational concepts, technology and policy when it comes to air and space power.

So today we’ve got a special treat. Senior leaders from the AI company, Primer. They’re joining us to talk about the industry perspective when it comes to AI and aerospace power. We can dream up cool applications all day long, but it takes real engineers and companies to deliver on those visions. Somebody’s got to build it, but more often than not, nowadays, industry’s moving faster than the Department of Defense. Civil companies can move at the speed of market, not defense bureaucracy. They’re not slowed down by the defense acquisition process or told exactly what and how to build it. Instead, they see a need in the market, they see a capability gap, a problem, and they have to jump faster than their competition to get there and deliver.

So if you [00:01:00] think about it, capitalism shares a lot of the innovation dynamics in competition that the military faces. So with that, I’d like to introduce Sean Moriarty and Leonard Law. Sean is the CEO of Primer, and his experience ranges from being the chief executive officer of companies such as Leaf Group and Ticketmaster, to being the chairman of Metacloud and so many others.

Sean, it’s great to have you here.

Sean Moriarty: Thanks so much for having us. We’re thrilled to be here and looking forward to the conversation.

Heather “Lucky” Penney: Thank you. And Leonard Law is the Chief Product Officer at Primer. Welcome to the podcast, Leonard.

Leonard Law: Thanks for having us.

Heather “Lucky” Penney: So, gentlemen, to start things off, please introduce Primer for our listeners. The AI market is really active right now. So, where does Primer fit into the mix and what is your differentiator? We’d like to help our listeners get to know you better and how you connect to the war fighter. And I think a huge piece of this is understanding the kind of AI that you bring to bear.

Sean Moriarty: Sure. So, at Primer, we originally incubated out of In-Q-Tel. [00:02:00] Our bread and butter is to make sense out of massive amounts of unstructured data, in near real time or certainly as close as we can get to that. And at our core we’ve got deep skills and experience in natural language processing, and we integrate models, both proprietary models, which we’ve developed, and the publicly available LLMs, to bring speed, power, and accuracy to analysis for the customer. We’re an enterprise software product shop at our core, and we work backwards from mission need, which means that when we build our solutions, we’re really thinking through that end user experience and mission set.

And the goal really is to allow people to be much more effective in their jobs. And if you think about the personas that we serve: analysts, operators, targeters, researchers, leaders. They’re trying to make sense of a world [00:03:00] around them that is moving very, very rapidly. They’re inundated with data, whether it’s proprietary data sources or open source, publicly available information, and they need to be able to make sense of that and make decisions that they can have confidence in, on that basis.

And so we build the tooling to allow people to achieve that.

Heather “Lucky” Penney: I think it’s really important that you emphasize that you’re there to really enhance the end user experience and their ability to make decisions. You’re not trying to replace those end users, because what you bring to the table is being able to really access all this unstructured data, which we’ll talk about and define for our listeners a little bit more, and make sense of it so that we can improve our decision processes.

That’s fascinating. Leonard, what’s your perspective?

Leonard Law: Yeah, I think that’s right. We’re here to supercharge those analysts and as Sean said, our focus is really on building practical, trustworthy AI that helps provide speed, power, and [00:04:00] accuracy to those mission-aligned workflows that our customers are trying to perform. And so really we are trying to work very closely to understand their tradecraft, to make sure that we are building AI that is aligned to their workflows.

Heather “Lucky” Penney: Yeah. You’re not replacing people, you’re making them better. And I think that really gets to what the true value proposition of AI is: enhancing human performance and enhancing mission outcomes. And I think a lot of folks, when they think about AI, they get confused. They think, oh, these things are gonna create robots, or they’re gonna replace humans, they’re gonna replace human cognition, and they’re not coming at it the right way. I really like your approach there.

Sean Moriarty: One of the things that’s really important to us is that’s exactly the point, which is if you look at the preconditions for someone to do their job, it is often not value add in a strategic sense. It’s necessary. So, oh my gosh, I’ve got all these sources of data, I’ve gotta figure out how to structure it, to collate it, to look for patterns.

And if you’re doing that via manual inspection, it takes forever. And what we really wanna be able to do is take our software and [00:05:00] effectively do all of the heavy lifting that is non-strategic, right? So that that person can actually bring their tradecraft, their creativity, their knowledge, and their imagination to bear from the very beginning. One of the things we say for the customer is, we want you to be able to start your day where you used to end it.

Heather “Lucky” Penney: That is a great tagline. But it’s actually something that matters and is really meaningful for the people who are actually doing the job. You’ve mentioned unstructured, as have I, a couple different times. Can we just take a quick break and define what you mean by unstructured data and why your ability to really integrate that and make meaning out of it is so important and is part of your magic sauce, Leonard?

Leonard Law: Yeah, so when we talk about unstructured data, what we’re talking about is sort of the universe of human readable sort of document or narrative data. So this can come in the form of human intelligence reports. This can come in the form of [00:06:00] publicly available information like news and social media. This can come in the form of things like NOTAMs or after action reports. And so we are working through all the vast quantities of data that are sort of deluging our customers today, and really trying to help them to find the insights buried within all that unstructured data. So we’re not really working with things like databases and tables, but really focusing on sort of those narrative structures that have a lot of hidden meaning that’s often hard to uncover for our human analysts and operators.

Heather “Lucky” Penney: And hard to uncover for human analysts and operators because they just have, they end up drowning in the data because the reports just get stacked so high and their human cognition has the ability to make meaning outta the words and the information that they’re getting. It’s just too much though, right?

What a lot of other AI approaches require is for that data to then be cleaned and curated so it has the same format for them to be able to use. So that’s one of the reasons why this unstructured, all the information you have is fairly weird. It’s all a little bit different. It’s cats and dogs, [00:07:00] but your ability to still make meaning out of that is, I think, something that’s very unique to what you bring to the table.

Sean Moriarty: One of the things I’ll call out. So imagine a world where someone is getting inputs from several thousand different disparate sources. But they have a very good understanding of what they’re actually looking for within that. We can, in a matter of seconds, parse all of that for them, surface all of the documents that are relevant. We can produce a summary of those documents, and that customer always is only one click away from the original source. So they understand provenance, they have the ability to visually inspect and assess that primary source as they go. So you’re getting the benefit of the speed of the machine. You’re getting that summary for you, but you’re also never far from the document that informed it. You’re always one click away.

Heather “Lucky” Penney: That’s amazing because that really allows us, as you mentioned, through the provenance, to be able to [00:08:00] either dig a little bit deeper, get additional context, and most importantly, avoid hallucinations.

Sean Moriarty: On the hallucination part we’ve done a lot of work. We’ve got a proprietary implementation, built off of RAG, which we refer to as RAG-V. Effectively what we’re doing is running that generative output back through the system to test and assess claims, and what that allows us to do for the customer is to make sure that what we’re producing has a very high accuracy rate, and anything that’s produced that is contested is gonna actually go back through that system so it effectively can be adjudicated. More importantly, it’s actually flagged for them so they actually can see where there’s any sort of conflict or where, in fact, the machine may have produced something that’s inconsistent with a reference dataset.
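[Editor's illustration] The idea of running generated output back through a check against a reference dataset can be sketched in a few lines. This is not Primer's RAG-V implementation, whose details are not described in the conversation. It is a minimal sketch that assumes claims are sentences and that "support" can be approximated by word overlap with reference passages. The function names, the overlap measure, and the 0.6 threshold are all hypothetical.

```python
import re


def tokenize(text):
    """Lowercase a string and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def check_claims(generated_text, reference_passages, threshold=0.6):
    """Split generated text into sentence-level claims and flag any claim
    whose best word overlap with the reference passages falls below the
    (hypothetical) threshold."""
    claims = [s.strip() for s in re.split(r"(?<=[.!?])\s+", generated_text) if s.strip()]
    results = []
    for claim in claims:
        claim_tokens = tokenize(claim)
        best_score, best_source = 0.0, None
        for idx, passage in enumerate(reference_passages):
            overlap = len(claim_tokens & tokenize(passage))
            score = overlap / len(claim_tokens) if claim_tokens else 0.0
            if score > best_score:
                best_score, best_source = score, idx
        results.append({
            "claim": claim,
            "support": round(best_score, 2),
            "source_index": best_source,   # index of the closest reference passage
            "flagged": best_score < threshold,
        })
    return results


if __name__ == "__main__":
    references = [
        "The convoy moved north on Tuesday.",
        "Three drones were observed near the border.",
    ]
    summary = "The convoy moved north on Tuesday. Twelve tanks were destroyed."
    for row in check_claims(summary, references):
        print(row)
```

In this toy run the first sentence is fully supported by a reference passage, while the second is flagged for review because little of it appears in any reference. A real system would likely use a stronger measure of support than word overlap.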

Heather “Lucky” Penney: That’s very interesting and very important when we begin to talk about intelligence and intelligence analysis. Which brings me back to the [00:09:00] genesis of Primer from In-Q-Tel. And Sean, you had mentioned this a little bit earlier, but In-Q-Tel is basically a nonprofit venture-backed technology incubator for the intelligence community, or IC for those that like to sound like insiders.

The concept behind In-Q-Tel was, in 1999, the CIA and the rest of the intelligence community realized that Silicon Valley was moving really fast and basically had much better tools than the Intel community did. So In-Q-Tel put the IC back in the lead when it came to advanced technology, and nowadays that speed and service is core to your origin story and core to your ethos. Do you wanna talk more about that and how that’s connected you, not just broadly to the IC, but to the war fighter and some of the projects that you’ve already fielded?

Sean Moriarty: Yeah. So, I think the IC astutely recognized, to your point, the rapid pace of innovation in Silicon Valley, and that it was going to be important to see these high-potential, best-of-breed companies that were working [00:10:00] on problems that were very well suited for many of the challenges that the IC was grappling with. Certainly, you know, if you think about the massive amount of unstructured data in the world. And then if you think about the explosion of that data with, for example, the rise of social media and news sources, and also increasingly the knowledge that there was extraordinary value in OSINT, and, you know, you had an intelligence community for decades that was relying substantially on proprietary information. I think companies like Primer are really important in solving that problem. And certainly, you know, the analyst world has changed dramatically because of that explosion of data.

Our approach within the IC has been to really get an understanding, again, of that end user and the challenges that they’re grappling with, always working backwards from user need. And we’re a product company, not a service company. We provide a very high level of service, [00:11:00] including, where appropriate, working side by side with the customer in whatever environment they’re operating in.

But the true north is to be informed by customer need, but to productize that. To build a platform and build extensible products that can solve those needs as they evolve. And that’s critical because, the world we’re in is changing almost by the year, whether that’s the capabilities of large language models. Or new needs that are coming to the customer on the basis of what’s happening. If you go back, you know, a decade ago your social media consumption and the way these platforms were used is very different than it is today. Now there are sources where nation state actors or folks working on their behalf, can effectively use these channels for messaging. They can use these channels to effectively wage, you know, asymmetric communications warfare and being able to understand those things in real time is [00:12:00] increasingly critical. And so we really work to provide the tooling so that people can not only understand the world around them, but who’s seeking to influence narratives across the globe and how that impacts global security and national security.

Heather “Lucky” Penney: Fascinating. Let’s pivot from the IC and talk about how you think your products could be used for the Air Force and Space Force.

Sean Moriarty: Sure. So if you think about the vast amount of data that is relevant to those mission sets, and also the fact that Space Force is newly stood up, they’re gonna be grappling with massive amounts of information, whether that’s related to near peer competitors or adversaries. If you think about one of the big issues, and a use case we’re working on right now, that counter UAS mission set, you got a lot of stuff in the air. And understanding what is where. Everything from: what particular drone type is in the [00:13:00] air? What’s its payload? How long can it remain in flight? What’s the activity, not only over a timeline, but against a map? You know, where are we seeing increasing or decreasing activity? What might that mean? Collating and synthesizing that data is a really tough task, but you can’t achieve situational awareness without it. And we have the ability to provide tremendous insights very quickly in this emerging world.

Heather “Lucky” Penney: So that’s fascinating. Now, counter UAS is a huge priority for the Air Force. What exactly are you doing in that domain?

Sean Moriarty: We’re helping to shift the paradigm in the counter UAS mission by moving us from a reactive posture to a more proactive, left-of-launch approach. We’ve all seen how drone threats are evolving rapidly, especially in conflict zones like Ukraine and Israel. These regions have become testing grounds for new drone tactics, from swarm coordination and kamikaze UAVs to improved payloads and evasion techniques. And increasingly we’re seeing the use of DIY builds and modified commercial drones, which are [00:14:00] cheap, adaptable, and very hard to track. There’s a wealth of real world data out there that national security and military stakeholders can learn from if they have the right tools, and that’s where we come in. We fuse open source intelligence, like social media, news and technical forums, with proprietary reporting streams to provide a full spectrum view of UAS threats as they emerge. Our natural language processing technology constantly ingests and synthesizes unstructured data to uncover emerging tactics, techniques and procedures: spoofing tactics, payload innovation, UAV platform modifications, evasion patterns. This isn’t just about intelligence, though. It’s about gaining operational advantage. By automating discovery and minimizing manual review, we shift the burden from data collection and manual triage to actual intelligence, enabling C-UAS professionals to spend less time sifting through information and more time delivering insights that drive interdiction and policy decisions. [00:15:00] We equip agencies to proactively identify emerging behaviors, assess capabilities like endurance and payload class, and recommend effective countermeasures. Whether it’s securing borders, protecting critical infrastructure, or staying ahead of drone enabled smuggling and espionage, we help mission stakeholders stay left of launch and stay ahead.

Heather “Lucky” Penney: This capability sounds really important for battle managers, for mission commanders, for component commanders and planners too, who are looking to optimize what they’re gonna do to maximize their mission effect in real time as well as for the next go. So there’s lots of different tasks that AI can do and for different missions, and those algorithms have to be specialized for their specific tasks. So these tools are fairly specialized, and although one can knit together different algorithms to execute more complex tasks, so they’re stacking algorithms, we really should avoid talking about AI as a catchall term. So what do you see as your sweet spot within this AI [00:16:00] world?

Sean Moriarty: I think the sweet spot for us is, again, going back to understanding that customer need with respect to discovery and analysis, and building that end-to-end tooling, which is tradecraft aware: how do people work every day? What are their existing workflows? You know, I don’t think that anyone, whether it’s an analyst, an operator, a targeter, or a researcher, needs another narrowcast tool in their portfolio. What they really need is software that can actually take them from beginning to end and/or plug into existing systems and platforms. Right? If you think across, you know, the Department of Defense or the IC, you’re talking about deploying into legacy environments and platforms that people are working with every single day. And, you know, deploying into existing platforms or standing up new ones is an evaluation we make, really driven by what is going [00:17:00] to be the most powerful implementation for the user to be more effective in their job. I would call out, you know, again, we are model agnostic. We recognize that depending on the use cases, there are gonna be various models that are more or less suited to a particular task. And that can be informed by speed, precision, accuracy, but also total cost of ownership. It could also be the environment you’re deploying into, which is, someone may be operating in an environment that is compute poor and they need as much speed, power, and accuracy as they can get in that environment.

You may be able to achieve that with a smaller model that’s actually operating on a laptop or a laptop-equivalent device in form factor. And so, you know, those really inform our design principles. I would say modularity, the ability to deploy in any environment and deliver maximal signal from noise within that environment, and giving the customer the ability to either work within their existing [00:18:00] platform or use our software de novo on a standalone basis, and then also be able to tailor the models that they’re leveraging to the tasks they’re trying to achieve.

Leonard Law: Perhaps to add a little bit of specificity as well, in terms of our sweet spot in AI, you know, Primer has a legacy sort of in this NLP space, and so we’ve used both proprietary and homegrown NLP models, but increasingly some of the more advanced open source and proprietary LLMs, to do exactly what Sean said, right?

Which is to again, help our users to find and understand insights, within this sort of vast troves of data that are out there. And ultimately use those for mission effectiveness. And so that’s really our goal.

Heather “Lucky” Penney: So Leonard, it sounds like the products that you’re delivering are, first of all they’re bespoke to what that individual user is doing from the beginning to end.

Because you’re looking at their entire tradecraft and what they do through the course of the day to be able to achieve the particular outcomes that they’re interested in. But what was fascinating about what Sean had said [00:19:00] was how it’s not as if you’re just one more thing that a human is gonna have to work with.

They’re not balancing a bunch of different new AI tools; you can actually integrate and glue those tools together to streamline the workflows, to improve mission outcomes, improve decisions, and accelerate those decisions as well. And you actually take into consideration the context and environment that they’re operating in. That’s really unique.

Sean Moriarty: The capabilities we build out, for example, are deployable and capable of being leveraged via a very robust API. And so, again, you know, the ability to deploy into an existing platform in the right place, within their workflows, not just the environment, but the workflows themselves, is critical.

Right? Our job is to make it easier for people to get their job done. And in many cases, that requires meeting them exactly where they are. If they’re spending their time on a particular platform and they need our capability within it, the best thing for them often is for us to [00:20:00] deploy directly into that.

Heather “Lucky” Penney: Yeah, I know. The last thing anybody wants to do is to have to learn one more platform, one more tool, and one more password and login. Right? So in this whole world of AI/ML, oftentimes when I hear people talking about using these tools, they say: sense, make sense, act. The make-sense piece is huge. That’s a major element of your value proposition because situational awareness is everything. But I’m gonna be a little old school. I’m gonna go back to John Boyd’s OODA loop and call this orientation. And the main reason why is that we still have to decide after we make sense.

We don’t wanna act before we make a decision: ready, fire, aim. So, I’m gonna be old school, as I said, and stick with the OODA loop paradigm, because we can’t make good decisions if we don’t have good make-sense, orientation, or situational awareness, SA as fighter pilots would say. So how do you use AI to make good situational awareness so that the user can then make good decisions and [00:21:00] therefore take good actions?

And how should they assess the types of actions that they’ll take? Because of course, that’s always gonna have some level of trade off. For example, in the tactical realm, we’ll sometimes sub-optimize our maneuvering or our positioning or our energy management because we’re thinking about preserving options for follow on actions. So, I know the real magic happens in the actual math, so I’m not asking you to divulge any real secrets here, but what do you think is foundational to building that orientation or that sense making?

Sean Moriarty: One of the things that I’d call out, and I’d like Leonard to elaborate a bit, is, you know, because again, we’re working with primary sources and the end user always has the ability to go back to the original source, information quality matters an awful lot, right? So they’re gonna be looking at something on the screen as a consequence of doing a search or filtering from search results.

And then they have the ability to [00:22:00] assess the quality of that information. We can also do some work to give them a sense for what we believe the quality of that information is, you know, based on, historical source quality or the extent to which it is consistent with or in conflict with other sources.

So, a lot of it is information provenance and quality assessment prior to making a decision. At this point I’ll kick it over to Leonard to elaborate a bit.
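[Editor's illustration] The two quality signals Sean mentions, historical source quality and consistency with other sources, could be combined into a single indicative score along the following lines. This is a minimal sketch, not Primer's method. The function name, the equal weighting, and the neutral default of 0.5 when no other sources exist are all assumptions made for illustration.

```python
def quality_score(historical_reliability, agreeing, conflicting, weight=0.5):
    """Blend a source's historical reliability (0.0 to 1.0) with how often
    other sources corroborate the item in question.

    agreeing / conflicting: counts of other sources that are consistent
    with, or in conflict with, this item. `weight` (hypothetical) sets how
    much the historical track record counts relative to corroboration.
    """
    total = agreeing + conflicting
    # With no other sources to compare against, fall back to a neutral 0.5.
    corroboration = agreeing / total if total else 0.5
    return weight * historical_reliability + (1 - weight) * corroboration


if __name__ == "__main__":
    # A historically reliable source, corroborated by 3 of 4 other reports.
    print(quality_score(0.8, agreeing=3, conflicting=1))
    # An unproven source with nothing to compare against.
    print(quality_score(0.6, agreeing=0, conflicting=0))
```

A score like this would only be a hint for the analyst, who can still open the original document and judge it directly.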

Leonard Law: Yeah. So as Sean said, we are living in an information-rich and information-dense environment, and really what we’re trying to do is help our users to understand insights buried within that information that may be coming from different perspectives, sometimes contradictory perspectives. And so our ability to allow users to kind of search and slice and dice information from various perspectives allows them to kind of establish that situational ground truth and understand kind of what they wanna believe, what they don’t wanna believe, and really helps to feed into that common operating picture that they’re all trying to establish. And at the end of the day, what we’re trying to [00:23:00] do is extract signal from noise. And right now we have an increasingly noisy information environment. And so by providing users these quick, verifiable, reliable, tied-to-source summaries that allow us to kind of derive those insights that are important for the analysts and operators to understand, they can start feeding that data back into that common operating picture to then make the decisions that they wanna make for the next action.

Sean Moriarty: And another example of that is being very quick to surface contested claims, right? So you got two completely different points of view. It could be with respect to asset strength. It could be, you know, with respect to troop movement, and that is a very clear signal for the user to say, “Hey, wait a second. I got two contested claims here. I’m gonna go dig deeper. I’m gonna go to what I believe is the best source of information for this, because I can’t rely on either of these claims, even though I think that claim A might be more accurate. But clearly there’s some conflict and some noise here.”
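[Editor's illustration] Surfacing contested claims can be pictured as grouping reports that talk about the same thing and checking whether they disagree. The sketch below assumes claims have already been extracted from text into simple records. The field names (`subject`, `attribute`, `value`, `source`) and the sample data are hypothetical, and real extraction from unstructured reporting is the hard part that this example skips.

```python
from collections import defaultdict


def find_contested(reports):
    """Group extracted claims by (subject, attribute) and return only the
    groups where sources report more than one distinct value.

    Each report is a dict with the hypothetical keys:
    subject, attribute, value, source.
    """
    grouped = defaultdict(lambda: defaultdict(list))
    for r in reports:
        grouped[(r["subject"], r["attribute"])][r["value"]].append(r["source"])

    contested = {}
    for key, by_value in grouped.items():
        if len(by_value) > 1:  # sources disagree about this attribute
            contested[key] = dict(by_value)
    return contested


if __name__ == "__main__":
    reports = [
        {"subject": "unit A", "attribute": "troop_count", "value": 500, "source": "src1"},
        {"subject": "unit A", "attribute": "troop_count", "value": 2000, "source": "src2"},
        {"subject": "unit A", "attribute": "heading", "value": "north", "source": "src1"},
        {"subject": "unit A", "attribute": "heading", "value": "north", "source": "src3"},
    ]
    for key, values in find_contested(reports).items():
        print(key, values)
```

Here only the troop count is surfaced, with each competing value kept alongside the sources behind it, so the user can go straight to the original reporting.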

Heather “Lucky” Penney: So can you provide some real world examples to make it concrete for our [00:24:00] listeners?

Sean Moriarty: This isn’t a contested claims example, but I think it is an example of giving someone the ability to make a decision quickly that has life and death ramifications. So we were doing some work with a combatant command in an area of the globe where we don’t have a lot of infrastructure, boots on the ground. And they were monitoring the movements of a rebel group using our tooling. And what they were able to see was that they actually had been moving closer and closer to an area where there were aid workers. And as a consequence of that, they were able to get to that group very quickly and tell them effectively to move out of harm’s way.

And in talking with the folks using Primer, what they basically said was that in a matter of hours, they were able to ascertain this. And if they looked at their prior conventional means of doing this, it would’ve [00:25:00] taken them, I think it was on the order of 50 days. Now this was something that was initiated very shortly after understanding that. So several hours. So very clearly you couldn’t have gotten to that outcome of getting people out of harm’s way using conventional means because you couldn’t plow through all that information and you would’ve missed it.

Heather “Lucky” Penney: Sean, thank you for that because these operational examples are so important, they help us as war fighters understand how AI can help us be better at our jobs and how we can execute our missions more successfully. And one term I’ve kept hearing from both of you is practical AI and we’ve had these conversations prior to this podcast. That’s what I’m drawing from. What do you mean by practical AI?

Sean Moriarty: Alex Karp, probably a year and a half ago, said publicly, when, I think, probably weary of all of the noise and hype around AI, he said, look, AI is just software that works. And I think what he was really pointing at [00:26:00] is, you know, the fact that, you know, we live in a world where people need to use software to be effective in their jobs. And, you know, natural language processing, machine learning, the large language models, these are tools in the toolbox to produce better software that has much greater impact on mission outcome.

And so when we talk about practical AI, we really look at it through the lens of people being able to do their jobs better than they could before. And so, you know, this idea of AI for AI’s sake, and by the way, this is very common at the beginning of any hype cycle, right? If you’re a leader right now responsible for technical implementation, it’s expected that you have an AI strategy.

Now the challenge of course, and you know, with emerging technology, is: well, what’s gonna have the most impact on my mission? Because saying I have an AI strategy that’s effectively divorced from significant impact on mission [00:27:00] is just box checking. And so practical AI for us, you know, the proof is in the pudding. If people are able to be much more effective in their jobs than they were before, that’s practical AI, right? Implementing something that’s not moving the needle either on time spent or mission outcome, allowing you, particularly in this environment, to do more with less, is not terribly practical. Right? And the other thing I’d say is, you know, within that realm of practicality, cost effectiveness matters an awful lot.

Heather “Lucky” Penney: Yeah. I was just gonna say that because I think a lot of people underestimate what the real cost of developing AI tools actually is. As well as, and Sean, you had mentioned this previously, when you’re looking to rightsize the compute power necessary to execute those tools, is the level of SWaP-C necessary to do that as well?

And so if we sort of embark on these overly optimistic [00:28:00] expectations of what AI can deliver, divorced from the current maturity of the AI or the algorithms or what we’re asking them to do, devoid of, as Sean you said, does it improve mission outcomes? Does it make it faster? Does it make me, um, leaner? Is it cost effective? I mean, it really just, I think, sets both the humans and the machines up for failure.

Sean Moriarty: The other thing I’d, you know, call out on that is, and I do believe this, you know, when what we’re talking about, kind of the foundational elements of AI, are well implemented in an organization, consistent with mission need and with a real focus on benchmarking (here’s what we were able to do before, here’s what we’re able to do now), there is extraordinary benefit from intelligent implementation, from a cost perspective and from an effectiveness perspective. And you know, the other thing that I’d call out is, you know, the innovation in the United States with respect to software is profoundly good. And it’s, you know, the [00:29:00] best deal ever, if we get it right, for the US government to be a very smart consumer and implementer of these capabilities. Because the other thing I’ll call out is that all of these capabilities are funded by venture investment to the tune of now hundreds of billions of dollars.

And then these capabilities are then made available to the government. And if you think about what has been done historically: a ton of custom development, highly fragmented, at what I would say is a much slower pace than the commercial sector can move. And certainly much slower than we can move in this AI age. And so we’ve got an extraordinary opportunity that we’ve never really had before to really evolve our capabilities effectively without breaking the bank. Because again, so much of this innovation is private sector funded and with increased focus on delivering these capabilities to government, [00:30:00] which was, you know, if you went back 15 years ago, 10 years ago, even five, there was nowhere near the focus on the government as a market for cutting edge tech with respect to software.

Heather “Lucky” Penney: Yeah. So I wanna go back to the contested claims conversation ’cause this is really important because a lot of the information that you’re gaining to be able to, of your unstructured data is open source. What happens when we see adversaries trying to leverage that open source data lake with bad data? Like are they, what, how do we go about filtering out the bad narratives, the bad documentation, the bad data that adversaries might be using to take us in the wrong direction? Corrupt our AI algorithms, lead us to poor decisions. So we’ve got a way to flag that, Hey, there’s some contested claims going on here, but how do we grade and how will a user know what [00:31:00] they should bias against and what they should weight for?

Sean Moriarty: Yeah. You know, so that, that’s a complicated problem set. And, you know, there are multiple approaches to that. So obviously, one, source provenance matters an awful lot. Two, you know, making sure, particularly in sensitive environments, that your models are effectively sequestered and encapsulated, right?

So they are not vulnerable to outside, you know, interference or being pumped with bad information. But I think it also speaks to the importance of the quality also of proprietary data, right? So if you, you go back for example, to, you know, the Russian invasion of Ukraine, you know, assessing, you know, Russian capability, it can be a challenging thing, and understanding how well equipped are they in their various movements, approaches and attacks? Um, they’re certainly gonna say one thing. We may know another. How are we [00:32:00] informed and how does that shape our risk calculus? And how much do we wanna weigh what they’re saying or what we’ve seen in their exercises versus proprietary intelligence that we may have about them, and what’s the difference and where do we wanna land on that risk continuum? You certainly, no one wants to underestimate an adversary. But overestimating an adversary is not without consequence either.

Heather “Lucky” Penney: That’s definitely true. Yeah. And that’s a, I think, and one reason why the approach that you take towards your products is really important. Understanding that tradecraft, getting the workflows, really understanding the mission need and the context of that mission, and then being able to provide those benchmarks. That to me seems like a very rational and very useful way to apply AI, but we’ve gotta get it in the hands of the warfighter in operational exercises and in that real world more broadly. Can you talk to us about how you’re deploying, uh, some of your tools for warfighters today?

Sean Moriarty: Sure. So, you know, we, we will [00:33:00] move as quickly as a customer is capable of moving, whether that’s spinning up a prototype for them quickly, the ability to work in any environment that they’re operating in. Kind of, I would say, independent of acquisition vehicles and contracting and the complexity there.

Um, you know, we need to be, you know, we want to be able to deploy as quickly as possible if we get into the right environment. You know, we can get up and running in a matter of weeks rather than months. And that’s also core to the way we think about building our products and deploying our products and doing a lot of tooling.

Because you’re also talking about, gotta be able to comport with security policy and governance. And so you can’t just have a great capability that is by itself not capable of being deployed into these environments. So a lot of the tooling that would be kind of much less interesting to the end user is a significant area of investment for us.

Heather “Lucky” Penney: Yeah. That authority to connect, that authority to operate, the fact that you [00:34:00] understand the limitations that government users, that military users, DOD users have is really important. And you’ve developed that knowledge through decades of experience with the IC community. So you’re talking about deploying these tools in weeks. Do you have sort of like basic platforms that you can then, that you then adapt and layer and integrate? Or are you developing everything from scratch?

Sean Moriarty: No. So we are a software product company and you know, our Primer Enterprise platform is the basis for that. Mm-hmm. And what we build out on top of that, you can think about them as distinct capabilities.

We refer to them internally as assets. And those assets that we talk about, it could be maps, it could be events, but some end user capability that is built on the platform, and the customer, based on their needs, has the ability to pick and choose amongst those assets and we can make sure that they’re getting them [00:35:00] delivered in a workflow consistent with their needs. So again, you know, we’re a products company that will provide as high touch services as necessary to accomplish the mission, but that product mindset is at our core. So a high degree of repeatability and deployment of these capabilities. You know, the idea that we can ship Primer Enterprise to a hundred customers that may have different needs, they have the ability to pick and choose amongst the assets they need to do their job. But also, keep in mind, you know, at the foundation we’re talking about ingesting this unstructured data from any source. And doing all the work we need to do so the user can interact meaningfully with that.

The foundational capability is search, which is part of the enterprise platform. And again, you know, the key to effectively unlocking insights all starts with a natural language search or a Boolean search. It’s really up to that [00:36:00] user and then they can go on that journey consistent with what their job is.

And then, that asset library is what allows them to have that robust experience. And, you know, as we layer in these capabilities, we can just cover more surface area of user need over time. So something that we may develop specific to a single customer in most cases becomes part of that asset library and then is available to others. There are certainly some cases where we will do something specific to customer need which will remain with that customer, but mostly the work we’re doing on assets has broad application.

Heather “Lucky” Penney: Leonard, I’d really love to hear what, oh, sorry. I wanted to bring you in the conversation so that jumping in was perfect. Go ahead.

Leonard Law: Yeah, the, the one thing I would add to what Sean just said. Sean used the word modularity before, right? Modularity is one of the keys to unlocking our ability to deploy effective solutions for our customers very rapidly. And I do wanna dig in a little bit to that product orientation that, that Sean [00:37:00] talked about, because we have a very deep belief that we can drive the best outcomes for our customers by investing continuously in a core product versus building bespoke solutions for every customer that we have. And so reinvesting and continuously investing in a core set of capabilities and assets that Sean talked about is really important for us to build the best capabilities for our end users.

Heather “Lucky” Penney: That’s sort of the rising tide lifts all boats, type of analogy, which I had to use a naval analogy for an airpower podcast, but hopefully our listeners will forgive me. So I understand why that makes sense to invest in the enterprise. Are you doing some kind of on the job training for your AI, if you will? How are you continuing to improve those products? Leonard?

Leonard Law: Yeah, so we spend a lot of time and as much as we are a product company, a very important element of our business is our forward deployed engineers and our staff and field engineers that are out working closely with customers to understand their data, integrate with their data sets, and make sure that our models are [00:38:00] fine-tuned appropriately to kind of provide the best results for the data they have at hand.

So, absolutely, we do spend quite a bit of time. It’s not a turnkey, you know, you don’t insert a CD into a computer and expect to install. This is something that is absolutely enterprise software that requires close collaboration between Primer and its customers to make sure that we have optimized it for their specific mission needs.

Heather “Lucky” Penney: Yeah. And that’s part of understanding the tradecraft and the workflow and what they’re really needing to get out of that. I’d like to go back to the environment. Sean, you had mentioned this, and Leonard you did as well, about really understanding how the end user, what they’re actually living in. Do they prioritize speed? Do they have compute constraints? Do they have power generation constraints? What kind of reach back do they have? How do you make that AI practical in those environments?

Sean Moriarty: Yeah, so that discovery process is really done, with our sales engineers, you know, working with AEs as we’re sitting and talking to a customer about the realities of their environment. We can stand up on bare metal, we can plug [00:39:00] into existing platforms, we can certainly run in their cloud environment, but that’s part of the discovery process with the customer. You know, what are, what, what are the constraints of the environment? Is it compute? Is it that you’re operating in a harsh environment and you have sporadic connectivity? And on that basis, we will figure out with the customer the right approach for them. So, we’re hitting that sweet spot, given the constraints, the mission needs, and what we believe in working with the customer is gonna give them the best mission results. And that goes all the way down to the models that they, you know, choose to use.

Certainly with our input. The other thing I’d call out is we, you know, we have an applied research team and the applied part is the most important part of that. So it’s not pie in the sky, theoretical research, but in a world where innovation is happening almost by the day in AI, these are really sharp engineers who have deep understanding of what we’re solving [00:40:00] for customers, what their needs are and where things are going in the future. So we have these really tight iteration loops where they’re learning about something that they’ve been researching and that capability’s going to be hitting in a matter of weeks or months.

And it’s something that we’re gonna be on early. We can test the heck out of it in the lab and we can see if it’s going to eke out performance benefit for real customer need. Right? And so, I mean, it’s our job to do two things. One, leverage our deep expertise as technologists so that we can bring our customers into the future.

But two, always working from a standpoint of a deep understanding of how they work every day and what they need to be successful. Like that’s really the art of it, but that, you know, again, there are things we can do now that couldn’t be done three months ago. And that’s gonna persist for the next several years at least.

Heather “Lucky” Penney: So gentlemen, you know, you’re working [00:41:00] in the DOD environment and with In-Q-Tel, you’ve been there for a long time. But everyone knows that the government is incredibly onerous to work with and that the timelines of development and acquisition are at a geologic pace, especially when you compare that to what VCs, venture capitalists, are expecting and what Silicon Valley and what the commercial civil market is capable of doing.

But we also have seen this administration make significant changes and strides in how they’re approaching software acquisition. And frankly, we’ve been seeing this for several administrations previously. Can you comment on the broader trend lines that you’re seeing, how that’s impacting the AI market space as well as your company?

Sean Moriarty: Yeah, so I think the administration is very clearly not only kind of on the right side of this particular issue, but has been very clear in articulating the criticality of adopting best of breed commercial software against mission. And you know, we’ve [00:42:00] also seen that manifest over the course of the past several years with the rise of, you know, DIU and so many different programs where the government outreach and the invitation to the commercial sector is probably an order of magnitude greater than it’s ever been before.

Now, the culture changes slowly, but if I think about it from a standpoint of direction from the administration and leadership and attitude, all the signs are there that we’re moving in the right direction. Now, at the same time, again, you know, when you’re dealing with a huge bureaucracy that has done things a certain way and if the way, you know, the way to think about acquisitions, it was for a world that was substantially hardware heavy and programs took forever, and software can be deployed very quickly and improve very quickly.

You know, it is gonna take a bit of time, but at the same time there’s more momentum. There’s certainly more [00:43:00] buy-in. And the administration has also been very clear about the criticality of moving in this direction and we’re certainly seeing that in customer interactions. Although always I, you know, I’d love us to be going much, much faster than we’re going today. We certainly need to.

Heather “Lucky” Penney: Leonard, any thoughts on that?

Leonard Law: No, I think Sean’s conclusion is exactly where my head is at, which is really just we see great signs, but we’d like to see it accelerate.

Heather “Lucky” Penney: Okay. This next question about the notion of complete cost. I think you both addressed this when you talked about your research and development arm and how you are investing in that to take advantage of the most recent developments that have come out of the broader AI community to deliver that to your customers, as well as investing in the enterprise platform as opposed to just becoming overly tailored, customized to one particular end user, and then having to reinvent that every time you have another end user.

What [00:44:00] about the notion of complete cost? Because we’ve seen from other AI companies, that they have concerns that the US government doesn’t fully understand the cost of developing AI for particular mission sets.

Sean Moriarty: Yeah. Look, I think it’s important to address. I’d say, on one hand, I do believe there’s truth in that. On the other, I think that’s more our problem than the customer’s problem. And that one of the beauties of innovation is the forcing function of customer demands, not just in capabilities and requirements, but cost effectiveness.

And, you know, it’s our job to deliver the greatest capability at the best possible price we can for the customer. And in so doing, we also need a sustainable business model. I think one of the things though that’s hidden in this, so certainly understanding, you know, the total cost of ownership is something we’re very focused on.

It’s part of our discovery process. We don’t want the customer to be surprised. We certainly don’t want to be surprised. And so we’re very [00:45:00] mindful of that going in. But I think probably a greater problem is, you know, we have got a real legacy technology challenge across the federal government. Which is, you know, you’ve got systems that are decades old, that are mediocre at best, that are really expensive to maintain. And the transition is not easy, right? Because people need to get their work done as they’re transitioning to new capability. And I think that’s probably more of a gating issue, right?

Which is effectively new start costs at a time of transition, because I mean, while we can, you know, we can deploy quickly if an environment is effectively ripe for that, someone may have the ongoing carrying costs of an inferior solution that they’re tethered to for much longer than they’d like. And very few systems can come in and wholesale replace, uh, without interruption of service.

So that transition time and the [00:46:00] carrying cost of, you know, inferior legacy systems, I think is actually the greater part of the problem.

Leonard Law: Yeah, I mean, the only thing I would add to that is this is why it’s so important now for the government to be focused on product acquisition as opposed to services acquisition.

Because to Sean’s point, it is incumbent on us as product builders and vendors to continue to keep pace with the market. You know, right now AI is doubling in capabilities every few months, and it’s also halving in cost every few months. In order for products to stay afloat and to take advantage of those things, we need to invest continuously in our product.

And that would just not be the case in a services oriented AI deployment.

Sean Moriarty: And that, that bridge, that bridging exercise is gonna be an important one for the government to be mindful of and get very good at figuring out, you know, working with the private sector because Leonard calls out a very important point, which is, the beauty of technology is it gets more powerful and cheaper, you know, on a regular [00:47:00] basis.

And but you wanna be on the right side of that curve and so are we willing to incur the near term cost to accelerate transition and transformation? Because the faster we go, the more money we’ll save. But early in that transition, you’re gonna have some incremental spend and will we be able to find that funding for those most critical mission sets to actually go faster. And that’s, you know, what we’re certainly going to see. Obviously you’ve got a flat DOD budget for FY 26, but you’ve got about $150 billion on a one-time basis of reconciliation money. And I think if those funds are thoughtfully deployed, you can significantly accelerate pace.

Heather “Lucky” Penney: It’s really interesting that bridging exercise that you talked about, Sean. I think of that often especially with respect to the Air Force’s need to recapitalize. So it’s, I think, a very apt analogy of having old, outdated hardware and software where the, uh, the Department of Defense is holding onto orphan [00:48:00] languages and outmoded processors and so forth, and being able to, and then needing to jump on board to new systems, new hardware, new software. But there’s gonna have to be that overlap ’cause guess, guess what? You know, the sun never sets.

Sean Moriarty: I do think that one thing that, you know, every, you know, leader in this area needs to be thinking about on the government side is as I’m bringing on new capabilities, what am I going to be sunsetting? And that should actually be part of their thinking.

And if it is just direct incremental costs, unless there is clear mission advantage to bringing on something new, but it can’t replace something else, we gotta be very mindful that we don’t allow out of this, the legacy environment to persist any longer. And that we have even further proliferation of tech because the environments are already unwieldy and unmanageable and, you know, if not done right, you can make that worse. [00:49:00]

Heather “Lucky” Penney: I think I see everybody nodding their heads, including all of our listeners. So, what would you want warfighters and senior decision makers in the Department of the Air Force and the DOD to know about these AI tools and the small companies that are often at the bleeding edge of technology? What words of wisdom would you pass to them so they can optimize and scale AI in their mission execution?

Sean Moriarty: I think that two things. One, AI done right, which is really software done right, is increasingly powerful and the innovation going on in the private sector is extraordinary. And two, to spend ample time really thinking about what can be transformative to mission outcome, and to seek out and challenge these companies like ours, like Primer, that are doing this work, and be very clear about what you need for mission outcome, and [00:50:00] challenge us because I know the private sector is ready, willing, and able to rise to the occasion.

And I certainly know we are.

Leonard Law: I echo what Sean said. You know, I think it’s important to find AI partners that are driven to support mission outcomes and, and to remember that, you know, companies like Primer are there to support the warfighter and to help supercharge their abilities as opposed to try to replace them. And then I think what I would call out, right, is to remember that our adversaries are adopting new technologies and AI rapidly. And so it’s incumbent on us to kind of stay abreast of the advancements of capabilities that will help empower, you know, our domestic force.

Heather “Lucky” Penney: Thank you. So, Sean, what you said about AI done right, software done right and needing to be transformative I think is really key there. Uh, ’cause we can’t just do AI for AI’s sake. So in the name of doing it right and being transformative, I’d like to hear from each of you where we should be in five and then 10 years. Paint a picture to help us understand how we should grade the homework of that progression. You know, [00:51:00] our listeners read the news and in the coming years, how should they assess what good looks like when it comes to AI progress?

Sean Moriarty: It is always a great question to ask, what, where do we want to be out into the future? Because you need to be able to work towards that, and you have to have a goal, if you have any hope in heck of getting there. You know, I would say, you know, one way to think about it, and I think benchmarking is really important and I think that, you know, the federal government can do a much better job of benchmarking the quality and efficacy of systems. But if you think about it right now, I think, you know, in the DOD, I think it’s pretty widely accepted that somewhat less than 5% of all unstructured data sitting in DOD repositories broadly is accessible and actionable. I don’t know what the right number of that should be when you look out five to 10 years, but we should have an aggressive goal to get to 50% of that data being actionable within five [00:52:00] years, and darn close to all of it within 10. Because if you think about what’s happening, so certainly you’ve got increasingly powerful software that has the ability to ingest and make sense of that data, companies like ours, and hardware is getting cheaper, AI is getting more powerful and cheaper.

And you know, we should look and say, if we truly believe data is a profoundly valuable information asset, as long as it’s not inert and just sitting on a file system, then we should have aggressive goals to make, you know, every bit useful to us. And it may be that X percent of it is junk and that’s fine, but we should be able to know that rather than guess at that because of our ability to interact with that. The second, you know, thing I’d speak to is we should assess baseline capabilities, you know, against these functions. So how long does it take for an analyst to complete a piece of finished intelligence and [00:53:00] how many inputs go into that and can we cut that in half over the next five years, with double or triple the number of inputs?

If we look at, you know, common operating picture, situational awareness, can we assess baseline robustness against a variety of scenarios today, recognize the deficiencies in that, and then benchmark something that’s materially better than that, that could be achieved by the deployment of high quality software on top of really robust IT infrastructure?

Heather “Lucky” Penney: So Leonard, I’m gonna ask you to answer this question slightly differently. What warning would signal that we’re missing the mark, especially as we look to keep an edge over our adversaries?

Leonard Law: So, I think there’s a couple things there, right? One thing is when we look at the future, and I tell this to my team all the time, it’s a fool’s errand to try to predict the future more than six months out. This AI space is evolving so rapidly that it’s almost impossible to kind of know what’s to come next. [00:54:00] I do think though, that looking at where AI can be effective is kind of critically important. And, you know, we’ve talked a little bit about the OODA loop before and I would be worried if in the future we’ve led to a world of complete autonomy of the AI to kind of do the full loop. I think it’s important for the human to always be in and on the loop. However, I do see a world where the AI can increasingly start supporting other parts of that loop, right? So supporting decision making, not replacing decision making, but supporting decision making. So how can we get to a point where we’re not just sensing, making sense, but also helping the warfighter to decide kind of what are the best courses of action he or she should take? How should I evaluate those against the data that’s available to me and the insights that come to, to become aware of and so on.

Heather “Lucky” Penney: Well, gentlemen, thank you so much. I know you both have super busy schedules and we definitely appreciate the time that you took to share your industry perspective with us.

It’s been a really fascinating conversation. I only wish that I had your AI tools when I was in the [00:55:00] NMCC. Again, thank you.

Sean Moriarty: Thank you so much. It’s a pleasure and a privilege to be here. We’ve enjoyed the time. Thank you so much.

Heather “Lucky” Penney: With that, I’d like to extend a big thank you to our guests for joining in today’s conversation.

I’d also like to extend a big thank you to you, our listeners, for your continued support and for tuning into today’s show. If you like what you heard today, don’t forget to hit that like button or follow or subscribe to the Aerospace Advantage. You can also leave a comment to let us know what you think about our show or areas that you would like us to explore further.

As always, you can join in on the conversation by following the Mitchell Institute on X, Instagram, Facebook, or LinkedIn, and you can always find us at mitchellaerospacepower.org. Thanks again for joining us and have a great aerospace power kind of day. See you next time.

Credits

Producer

Shane Thin

Executive Producer

Doug Birkey